Configure GigaVUE Fabric Components in GigaVUE-FM

After you configure the Monitoring Domain, GigaVUE-FM opens the OpenStack Fabric Launch Configuration page. On this page, you can configure the following fabric components:

- UCT-V Controller
- GigaVUE V Series Proxy
- GigaVUE V Series Node

On the OpenStack Fabric Launch Configuration page, enter or select the required information as described in the following table.

|

Fields |

Description |

|

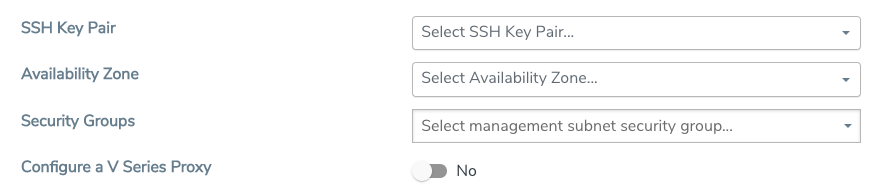

SSH Key Pair |

The SSH key pair for the UCT-V Controller. For more information about SSH key pair, refer to Key Pairs. |

|

Availability Zone |

The distinct locations (zones) of the OpenStack region. |

|

Security Groups |

The security group created for the UCT-V Controller. For more information, refer to Security Group for OpenStack . |

|

Prefer IPv6 |

Enables IPv6 to deploy all the Fabric Controllers, and the tunnel between hypervisor to V Series node using IPv6 address. If the IPv6 address is unavailable, it uses an IPv4 address. This functionality is supported only in OVS Mirroring. |

|

Enable Custom Certificates |

Enable this option to validate the custom certificate during SSL Communication. GigaVUE-FM validates the Custom certificate with the trust store. If the certificate is not available in Trust Store, communication does not happen, and an handshake error occurs. Note: If the certificate expires after the successful deployment of the fabric components, then the fabric components moves to failed state. |

|

Certificate |

Select the custom certificate from the drop-down menu. You can also upload the custom certificate for GigaVUE V Series Nodes, GigaVUE V Series Proxy, and UCT-V Controllers. For more detailed information, refer to Install Custom Certificate. |
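The Prefer IPv6 fallback rule (IPv6 when available, otherwise IPv4) can be pictured as a small address-selection function. This is a minimal sketch, not GigaVUE-FM code: the function name `pick_address` and its `reachable` input, which stands in for whatever connectivity check is actually performed, are hypothetical.

```python
import ipaddress
from typing import Iterable, Optional


def pick_address(candidates: Iterable[str], reachable: Iterable[str]) -> Optional[str]:
    """Pick the address to use for a fabric component (hypothetical helper).

    Prefers a reachable IPv6 address and falls back to a reachable IPv4
    address; returns None if no candidate is reachable.
    """
    # Normalize both inputs so "2001:DB8::5" and "2001:db8::5" compare equal.
    reachable_set = {ipaddress.ip_address(a) for a in reachable}
    parsed = [ipaddress.ip_address(a) for a in candidates]
    for version in (6, 4):  # try IPv6 first, then fall back to IPv4
        for addr in parsed:
            if addr.version == version and addr in reachable_set:
                return str(addr)
    return None


addrs = ["10.0.0.5", "2001:db8::5"]
print(pick_address(addrs, reachable=["10.0.0.5", "2001:db8::5"]))  # 2001:db8::5
print(pick_address(addrs, reachable=["10.0.0.5"]))                 # 10.0.0.5
```

The same preference order applies to the note on the GigaVUE V Series Node management network, where IPv6 is preferred and IPv4 is used if IPv6 is not reachable.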

Select Yes to configure a GigaVUE V Series Proxy.

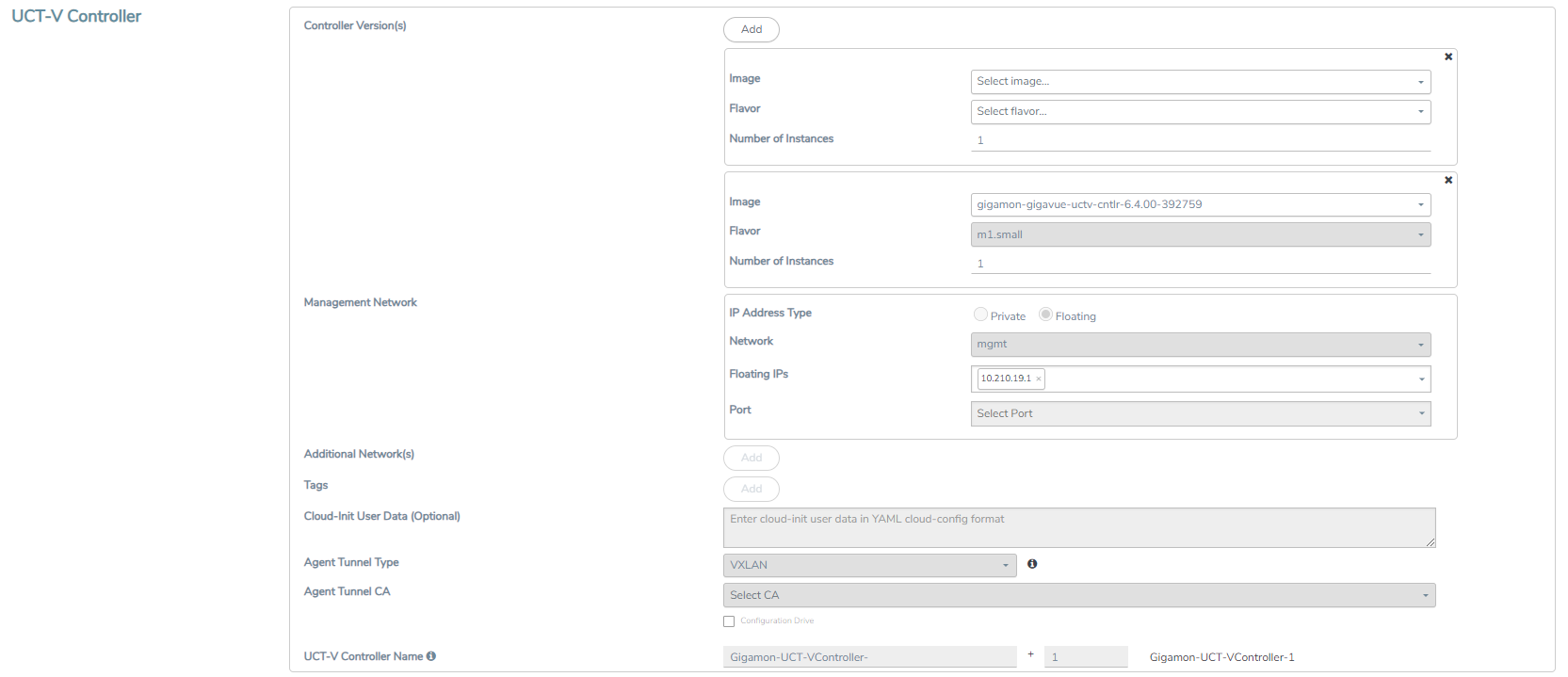

Configure UCT-V Controller

A UCT-V Controller manages multiple UCT-Vs and orchestrates the flow of mirrored traffic to GigaVUE V Series nodes. While configuring the UCT-V Controllers, you can also specify the tunnel type to be used for carrying the mirrored traffic from the UCT-Vs to the GigaVUE V Series nodes.

- UCT-V Controllers must be configured in the OpenStack cloud only if UCT-Vs are used to capture traffic.

- A UCT-V Controller can manage only UCT-Vs that have the same version as the controller.
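Because a UCT-V Controller manages only UCT-Vs of its own version, it can help to check an inventory of installed agents before choosing controller versions. This is a minimal sketch under assumptions: the instance names, version strings, and the idea of an inventory dictionary are hypothetical and do not come from GigaVUE-FM.

```python
from collections import defaultdict
from typing import Dict, List


def find_version_mismatches(controller_version: str, agents: Dict[str, str]) -> List[str]:
    """Return the instances whose UCT-V version differs from the controller's."""
    return sorted(name for name, version in agents.items() if version != controller_version)


def group_by_version(agents: Dict[str, str]) -> Dict[str, List[str]]:
    """Group instances by UCT-V version; each key needs a controller of that version."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for name, version in agents.items():
        groups[version].append(name)
    return {version: sorted(names) for version, names in groups.items()}


# Hypothetical inventory: instance name -> installed UCT-V version.
agents = {"vm-a": "6.5.00", "vm-b": "6.5.00", "vm-c": "6.4.00"}
print(find_version_mismatches("6.5.00", agents))  # ['vm-c']
print(group_by_version(agents))  # {'6.5.00': ['vm-a', 'vm-b'], '6.4.00': ['vm-c']}
```

Each distinct version in the grouped result corresponds to a UCT-V Controller version you would need to add.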

Enter or select the required information in the UCT-V Controller section as described in the following table.

| Fields | Description |
|---|---|
| Controller Version(s) | The UCT-V Controller version that you configure must always have the same version number as the UCT-Vs deployed in the instances. There is a specific UCT-V Controller version for OVS Mirroring and for OVS Mirroring + DPDK. For more information, refer to the GigaVUE-FM Version Compatibility Matrix. Note: If there is a version mismatch between the UCT-V Controllers and the UCT-Vs, GigaVUE-FM cannot detect the agents in the instances. To add UCT-V Controllers: |
| Management Network | This section defines the management network that GigaVUE-FM uses to communicate with UCT-V Controllers, GigaVUE V Series Proxy, and GigaVUE V Series Nodes. Network: Select the management network ID. Ports: Select a port associated with the selected management network ID. IP Address Type: The type of IP address GigaVUE-FM uses to communicate with UCT-V Controllers: |
| Additional Network(s) | (Optional) If there are UCT-Vs on networks that are not IP routable from the management network, specify additional networks or subnets so that the UCT-V Controller can communicate with all the UCT-Vs. Click Add to specify additional networks (subnets), if needed. Make sure that you also specify a list of security groups for each additional network. Ports: Select a port associated with the network. |
| Tag(s) | (Optional) The key name and value that help identify the UCT-V Controller instances in your environment. For example, you might have UCT-V Controllers deployed in many regions. To distinguish these UCT-V Controllers by region, you can provide a name (also known as a tag) that is easy to identify, such as us-west-2-uctv-controllers. To add a tag: |
| Cloud-Init User Data | (Optional) Enter the cloud-init user data in cloud-config format. |
| Agent Tunnel Type | The type of tunnel used to send traffic from the UCT-Vs to the GigaVUE V Series nodes. The options are GRE, VXLAN, and secure tunnels (TLS-PCAPNG). |
| Agent Tunnel CA | The Certificate Authority (CA) that the UCT-V Controller uses to connect the tunnel. |
| UCT-V Controller Name | (Optional) Enter the name of the UCT-V Controller. The UCT-V Controller name must meet the following criteria: |
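The Cloud-Init User Data field expects cloud-config format, which cloud-init recognizes by a `#cloud-config` header on the first line. The sketch below checks for that header before you paste user data into the field. The sample contents (the `packages` and `runcmd` entries, the `tcpdump` package, and the marker file path) are illustrative assumptions only, not settings required by the fabric components.

```python
SAMPLE_USER_DATA = """#cloud-config
packages:
  - tcpdump
runcmd:
  - echo "fabric component booted" > /tmp/boot-marker
"""


def is_cloud_config(user_data: str) -> bool:
    """Return True if user_data starts with the #cloud-config header line.

    This checks only the header; it does not validate the YAML body.
    """
    lines = user_data.splitlines()
    return bool(lines) and lines[0].strip() == "#cloud-config"


print(is_cloud_config(SAMPLE_USER_DATA))        # True
print(is_cloud_config("#!/bin/bash\necho hi"))  # False
```

A shell script such as the second input is also valid cloud-init user data, but it is not in cloud-config format, which is what these fields ask for.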

Configure GigaVUE V Series Proxy

The fields in the GigaVUE V Series Proxy configuration section are the same as those in the UCT-V Controller section. Refer to Configure UCT-V Controller for the field descriptions.

Configure GigaVUE V Series Node

Creating a GigaVUE V Series node profile automatically launches the V Series node. Enter or select the required information in the GigaVUE V Series Node section as described in the following table.

| Parameter | Description |
|---|---|
| Image | Select the GigaVUE V Series node image file. |
| Flavor | Select the flavor (the OpenStack instance size, which defines the vCPUs, memory, and disk) for the GigaVUE V Series node. |
| Management Network | For the GigaVUE V Series Node, the management network is the network that the GigaVUE V Series Proxy uses to communicate with the GigaVUE V Series Nodes. Select the management network ID. Ports: Select a port associated with the selected management network ID. Note: When both IPv4 and IPv6 addresses are available, the IPv6 address is preferred; if the IPv6 address is not reachable, the IPv4 address is used. |
| Data Network | The network that the GigaVUE V Series node uses to communicate with the monitoring tools. Multiple networks are supported; click Add to add additional networks. |
| Tag(s) | (Optional) The key name and value that help identify the GigaVUE V Series node instances in your environment. For example, you might have V Series nodes deployed in many regions. To distinguish these nodes by region, you can provide a name (also known as a tag) that is easy to identify. To add a tag: |
| Cloud-Init User Data | (Optional) Enter the cloud-init user data in cloud-config format. |
| Min Instances | The minimum number of GigaVUE V Series nodes to be launched in OpenStack. The lowest allowed value is 1. Note: GigaVUE-FM deletes nodes that are idle for more than 15 minutes. |
| Max Instances | The maximum number of GigaVUE V Series nodes that can be launched in OpenStack. |
| V Series Node Name | (Optional) Enter the name of the V Series node. The V Series node name must meet the following criteria: |
| Tunnel MTU (Maximum Transmission Unit) | The MTU applied on the outgoing tunnel endpoints of the GigaVUE V Series node when a monitoring session is deployed. The default value is 1450. The value must be 42 bytes less than the default MTU for GRE tunnels, or 50 bytes less than the default MTU for VXLAN tunnels. |
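The Tunnel MTU rule is simple subtraction: the encapsulation overhead (42 bytes for GRE, 50 bytes for VXLAN) is taken off the default MTU. The sketch below assumes "default MTU" means the MTU of the underlying network interface, and the 1500-byte value in the example is an assumption about your network, not a GigaVUE-FM setting. The function name `tunnel_mtu` is hypothetical.

```python
# Encapsulation overhead in bytes, as stated in the Tunnel MTU description.
TUNNEL_OVERHEAD = {"gre": 42, "vxlan": 50}


def tunnel_mtu(interface_mtu: int, tunnel_type: str) -> int:
    """Return the Tunnel MTU for a given interface MTU and tunnel type."""
    try:
        overhead = TUNNEL_OVERHEAD[tunnel_type.lower()]
    except KeyError:
        raise ValueError(f"unsupported tunnel type: {tunnel_type!r}") from None
    return interface_mtu - overhead


print(tunnel_mtu(1500, "vxlan"))  # 1450
print(tunnel_mtu(1500, "GRE"))    # 1458
```

With a 1500-byte interface MTU, the VXLAN result matches the documented default of 1450.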

Click Save to save the OpenStack Fabric Launch Configuration.
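The Min Instances and Max Instances values saved with this configuration should be consistent with each other. This is a minimal sketch of such a check: the lower bound of 1 comes from the Min Instances description, while the requirement that Max Instances be at least Min Instances is an assumption drawn from the field names. The function `validate_instance_counts` is hypothetical.

```python
def validate_instance_counts(min_instances: int, max_instances: int) -> None:
    """Raise ValueError if the Min/Max Instances pair is inconsistent."""
    if min_instances < 1:
        # The Min Instances description gives 1 as the lowest allowed value.
        raise ValueError("Min Instances must be at least 1")
    if max_instances < min_instances:
        # Assumption: Max Instances may not be lower than Min Instances.
        raise ValueError("Max Instances must be greater than or equal to Min Instances")


validate_instance_counts(1, 4)  # passes silently
```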

To view the fabric launch configuration of a fabric node, click the fabric node or proxy. A quick view of the Fabric Launch Configuration appears on the Monitoring Domain page.