Configure CPN-UPN Communication Solution using Ansible

To configure CPN-UPN Communication using Ansible:

Create an inventory directory to store all the configuration files related to CPN-UPN Communication.

username@fmreg26:~$ mkdir cupsSolution

Create the fmInfo.yml file inside the inventory directory with the IP address, username, and password of GigaVUE-FM. Ansible uses this file to fetch the GigaVUE-FM details.

Create a JSON file named ansible_inputs.json inside the inventory directory that contains the paths of the files listed below. This allows Ansible to locate and access these files.

- fm_credential_file - Provide the path of the fmInfo.yml file.

- yaml_payload_path - Provide the path of the file that stores the payload sent to GigaVUE-FM. This file is created automatically when the cups playbook runs.

- golden_payload_path - Provide the path of the file that holds the golden payload, that is, the payload of a successful cups solution deployment.

- deployment_report_path - Provide the path of the file that stores the deployment report. This file is created automatically when the cups playbook runs.
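The four entries above can be written out with a short script. The following is a minimal sketch, assuming the key names listed above are used verbatim as JSON keys; the file names payload.yml, golden_payload.yml, and deployment_report.txt are hypothetical placeholders, not names required by the playbook.

```python
import json
from pathlib import Path


def write_ansible_inputs(inventory_dir: Path) -> Path:
    """Write ansible_inputs.json with the four path entries described above."""
    inventory_dir.mkdir(parents=True, exist_ok=True)
    inputs = {
        # Path of the fmInfo.yml file that holds the GigaVUE-FM login details.
        "fm_credential_file": str(inventory_dir / "fmInfo.yml"),
        # The three file names below are hypothetical placeholders.
        "yaml_payload_path": str(inventory_dir / "payload.yml"),
        "golden_payload_path": str(inventory_dir / "golden_payload.yml"),
        "deployment_report_path": str(inventory_dir / "deployment_report.txt"),
    }
    target = inventory_dir / "ansible_inputs.json"
    target.write_text(json.dumps(inputs, indent=2) + "\n", encoding="utf-8")
    return target
```

Calling write_ansible_inputs(Path.home() / "cupsSolution") would place the file next to fmInfo.yml in the inventory directory created earlier.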

Create the mobility_inventory file inside the inventory directory.

Enter the details in the file as shown in the example below:

[IPInterfaceSolution]

<Name of the Cluster or standalone device IP that contains the ports that need to be configured.>

[ToolGroups]

<Name of the Cluster or standalone device IP on which the Tool Group needs to be configured.>

<Name of the Cluster or standalone device IP on which the Tool Group needs to be configured.>

[Gigastreams]

<Name of the Cluster or standalone device IP on which the Gigastreams needs to be configured.>

[GTPWhitelist]

<Name of the Cluster or standalone device IP on which the GTPWhitelist Data Base needs to be configured.>

[Ports]

<Name of the Cluster or standalone device IP that contains the ports that need to be configured.>

[Policies]

<Name of the Global policy or policies.>

[CPN]

<Name of the CPN or CPNs.>

[UPN]

<Name of the UPN or UPNs.>

[Sites]

Site_Name1='["<Name of the CPN>"]' upn_list='[]' sam_list='[]'

Site_Name2='[]' upn_list='["<Name of the UPN>"]' sam_list='[]'

[MobilitySolution]

<Name of the CPN-UPN Communication solution file, along with the lts_policy file name, 5g_policy file name, lte_policy file name, and the list of sites participating in the CPN-UPN Communication solution.>

Create the host_vars directory inside the inventory directory to hold the files for the elements listed in the mobility_inventory file.

gigamon@fmreg26:~/cupsSolution$ mkdir host_vars

Each unique element in the mobility_inventory file, such as Ports, GigaStream, GTP forward list, and Tool Groups, must have a file with the same name as the element inside the host_vars directory.

For example:

- The element named 'cpnUkLTE' under the group 'CPN' has a file named 'cpnUkLTE' inside the host_vars directory. This file has the properties of the CPN.

- The element named 'cluster-one' has a file named 'cluster-one' inside the host_vars directory. This file has the properties for all the groups, such as Ports, IPInterface, and ToolGroups, of which the element is a member.
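The naming rule above can be checked before running the playbook. The following is a rough sketch, not Ansible's own inventory parser: it assumes the element name is the first token on each entry line, and it also accepts a .yml or .yaml extension on the host_vars file, which is an assumption rather than something stated here. The function names are hypothetical.

```python
from __future__ import annotations

import re
from pathlib import Path


def inventory_elements(inventory_text: str) -> set[str]:
    """Collect the first token of every entry line in an INI-style inventory."""
    names = set()
    for raw in inventory_text.splitlines():
        line = raw.strip()
        # Skip blank lines, comments, and [Group] headers.
        if not line or line.startswith(("#", ";", "[")):
            continue
        # The element name ends at the first whitespace or '=' character.
        names.add(re.split(r"[\s=]", line, maxsplit=1)[0])
    return names


def missing_host_vars(inventory_file: Path, host_vars_dir: Path) -> list[str]:
    """Return element names that have no same-named file in host_vars."""
    elements = inventory_elements(inventory_file.read_text(encoding="utf-8"))
    present = set()
    for path in host_vars_dir.iterdir():
        if path.is_file():
            # Assumption: 'name', 'name.yml', and 'name.yaml' all count as a match.
            is_yaml = path.suffix in (".yml", ".yaml")
            present.add(path.stem if is_yaml else path.name)
    return sorted(elements - present)
```

An empty list from missing_host_vars means every element named in the inventory has a matching file.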

Below are samples of host_vars files.

Prerequisite:

---
validate_certs: false
Ports:
  - port:
      - 1/1/x1
      - 1/1/x2
    adminStatus: enable
    type: network
GTPWhitelist:
  - alias: gtp1
    imsi: 310260564627811,310260564627812
    state: present
  - alias: gtp2
    inputFile: './whitelistKeys/TenIMSIs_Valid.txt'
    state: present
Gigastreams:
  - alias: toolGS_C11
    ports:
      - 4/1/x1
      - 4/1/x2
    type: hybrid
    state: present
  - alias: toolGS_C12
    ports:
      - 4/1/x3..x4
    type: hybrid
    state: present
ToolGroups:
  - alias: pgGrp_C11
    ports:
      - 2/1/x1
    smartLb: false
    type: tool
    state: present
  - alias: pgGrp_C12
    ports:
      - 2/1/x2
    smartLb: false
    type: tool
    state: present

Site

Mobility Solutions are generally spread across multiple geographically dispersed data centers called sites.

A site is a collection of the following:

- Network element functions.

- Traffic access points for interfaces of such network element functions.

- Visibility and Analytics Fabric (VAF) nodes.

- Traffic monitoring or analysis tool devices (called probes) that are locally connected without using any IP routed tunnels.

---
Site:
  # Name of the site
  alias: UK
  # 'skipDeployment' attribute is 'false' for the sites that are intended to be deployed in the incremental deployment process
  skipDeployment: true
  # Tag values assigned to the site
  tags:
    - tagKey: Location
      tagValues:
        - UK
  # All the tools used in the site
  toolBindings:
    - alias: GeoProbe
      toolResourceType: GIGASTREAM
      toolClusterId: cluster-one
      toolResourceId: toolGS_C11
    - alias: EEA
      toolResourceType: PORTGROUP
      toolClusterId: cluster-one
      toolResourceId: pgGrp_C11
  # Network Ports. Fill as needed in the format 'clusterid:portId'
  networkPorts: []
  # Policies under 'siteOverrideOfPolicyArrangements' override global policies
  siteOverrideOfPolicyArrangements:
    forLTE:
      _file: /home/ddaniel/automationInventoryDirectory/host_vars/lte_policy_1.yml
    for5G: {}
  # Leave upNodes and cpNodes as empty
  upNodes: []
  cpNodes: []

cpNode

---

ProcessingNode:

# Name of the control processing node

alias: cpnUkLTE

# Tags assigned to the processing node

tags:

- tagKey: Dept

tagValues:

- IT

- Engg

# Type of control node. Possible values for the nodeType are: 'PCPN_LTE', 'PUPN', 'PCPN_5G'

nodeType: PCPN_5G

# Location of the gigasmart engine port assigned to the processing node

location:

clusterId: cluster-one

enginePorts:

- 2/3/e1

# IP interface that needs to be used by the processing node

ipInterfaceAlias: dev3_IpIntUpn_1

gtpControlSample: false

gtpRandomSampling:

enabled: false

# min: 12, max: 48, multiples of 12hrs

interval: 12

numberOfLteSessions: 100000

numberOf5gSessions: 100000

# GS Group HTTP2 port list

app5gHTTP2Ports:

- 8080

- 9000

# Network Ports. Fill as needed in the format 'clusterid:portId'

nodeOverrideNetworkPorts: []

trafficSources:

- networkFunctionName: cpn_pod1_SGW-C

networkFunctionType: SGW-C

tags:

- tagKey: Dept

tagValues:

- IT

- Engg

networkFunctionInterfaces:

- tunnelIdentifiers:

- interfaceTunnelIdentifierType: IPADDRESS

value: 198.51.100.42

netMask: 255.255.255.0

- interfaceTunnelIdentifierType: PORT

value: 8805

interfaceType: Sxa

# Network Ports. Fill as needed in the format 'clusterid:portId'

sourceOverrideNetworkPorts:

- 192.168.65.8:8/1/x3

# TCP load balancing properties, applicable only for nodeType PCPN_5G

appTcp:

# Possible values for application are : 'broadcast', 'enhanced', 'drop'

application: broadcast

# Possible values for tcpControl are : 'broadcast', 'enhanced', 'drop'

tcpControl: broadcast

# To enable load balancing, set the value to true

loadBalance: false

upNode

---

ProcessingNode:

# Name of the user processing node

alias: upnDallas

# Tags assigned to the processing node

tags:

- tagKey: Dept

tagValues:

- IT

- Engg

# Type of user node. Possible values for the nodeType are: 'PCPN_LTE', 'PUPN', 'PCPN_5G'

nodeType: PUPN

# To make user node as standalone

standAloneMode: true

# Location of the gigasmart engine port assigned to the processing node

location:

clusterId: cluster-two

enginePorts:

- 6/3/e1

- 6/3/e2

# IP interface that needs to be used by the processing node

ipInterfaceAlias: dev3_IpIntUpn_1

gtpControlSample: false

gtpRandomSampling:

enabled: false

# min: 12, max: 48, multiples of 12hrs

interval: 12

# Network Ports. Fill as needed in the format 'clusterid:portId'

nodeOverrideNetworkPorts: []

trafficSources:

- networkFunctionName: upn_pod1_SGW-U

networkFunctionType: SGW-U

tags:

- tagKey: Dept

tagValues:

- IT

- Engg

networkFunctionInterfaces:

- tunnelIdentifiers:

- interfaceTunnelIdentifierType: IPADDRESS

mask: 255.255.255.0

address: 198.58.100.45

- interfaceTunnelIdentifierType: PORT

value: 8805

interfaceType: Sxa

# Network Ports. Fill as needed in the format 'clusterid:portId'

sourceOverrideNetworkPorts:

- 192.168.65.9:9/1/x4

- 192.168.65.9:9/1/x5

- networkFunctionName: upn_pod2_UPF

networkFunctionType: UPF

tags:

- tagKey: Dept

tagValues:

- IT

- Engg

networkFunctionInterfaces:

- tunnelIdentifiers:

- interfaceTunnelIdentifierValue:

mask: 255.255.255.0

address: 198.58.100.46

interfaceTunnelIdentifierType: IPADDRESS

- interfaceTunnelIdentifierValue:

value: '2152'

interfaceTunnelIdentifierType: PORT

interfaceType: N11

sourceOverrideNetworkPorts:

- 192.168.65.9:9/1/x6

5GPolicy

---

5GPolicy:

# gtpFlowTimeout is multiplied by 10 minutes to arrive at a timeout interval. (gtpFlowTimeout: 48 = 8 hours). Set this interval to match customer network's GTP session timeout for optimal results

gtpFlowTimeout: 48

# gtpPersistence -- save state tables during reboot or box failure. Remove if not using persistence

gtpPersistence:

# interval in minutes to save state table (min value is 10)

interval: 10

restartAgeTime: 30

fileAgeTimeout: 30

sampling:

flowMaps:

- alias : samplingMap2

rules:

- interface:

dnn: internet.miracle

pei: '*'

supi: 46*

gpsi:

nas_5qi:

tac:

nci:

plmndId:

nsiid:

# -- controlPlanePercentage: specifies percentage of sampling at CPN (set to 100 for no CPN sampling)

controlPlanePercentage: 100

# -- userPlanePercentage: specifies percentage of sampling at UPN (will be applied to all UPNs -- use site override to set different rates per site)

userPlanePercentage: 50

tool: EEA

whitelisting:

whiteListAlias: gtp1

flowMaps:

- alias : whitelistMap2

rules:

- dnn: internet.miracle

interface:

- supi:

ran:

tool: EEA

loadBalancing:

# one of { flow5g }

appType: flow5g

# metric is the load balancing method -- one of { leastBw, leastPktRate, leastConn, leastTotalTraffic, roundRobin, wtLeastBw, wtLeastPktRate, wtLeastConn, wtLeastTotalTraffic, wtRoundRobin, flow5gKeyHash}

metric: flow5gKeyHash

# hashingKey -- ignored if metric is not of type 'flow5gKeyHash' -- one of { supi | pei| gpsi }

hashingKey: supi

LTEPolicy

---

LTEPolicy:

# gtpFlowTimeout is multiplied by 10 minutes to arrive at a timeout interval. (gtpFlowTimeout: 48 = 8 hours). Set this interval to match customer network's GTP session timeout for optimal results

gtpFlowTimeout: 48

# gtpPersistence -- save state tables during reboot or box failure. Remove if not using persistence

gtpPersistence:

# interval in minutes to save state table (min value is 10)

interval: 10

restartAgeTime: 30

fileAgeTimeout: 30

sampling:

flowMaps:

- alias : samplingMap1

rules:

- interface:

version: any

apn: internet.miracle

imei: 123*

imsi:

msisdn:

qci:

# -- controlPlanePercentage: specifies percentage of sampling at CPN (set to 100 for no CPN sampling)

controlPlanePercentage: 100

# -- userPlanePercentage: specifies percentage of sampling at UPN (will be applied to all UPNs -- use site override to set different rates per site)

userPlanePercentage: 50

tool: GeoProbe

whitelisting:

whiteListAlias: gtp1

flowMaps:

- alias : whitelistMap1

rules:

- version: v1

interface:

apn:

tool: GeoProbe

loadBalancing:

# one of { gtp | aft | tunnel }

appType: gtp

# metric is the load balancing method -- one of { leastBw, leastPktRate, leastConn, leastTotalTraffic, roundRobin, wtLeastBw, wtLeastPktRate, wtLeastConn, wtLeastTotalTraffic, wtRoundRobin, gtpKeyHash}

metric: gtpKeyHash

# hashingKey -- ignored if metric is not of type 'gtpKeyHash' -- one of { imsi | imei | msisdn }

hashingKey: imsi

To deploy the CPN-UPN Communication solution, follow these steps:

- Set an additional environment variable so that the login details for the GigaVUE-FM IP address can be looked up in the fmInfo.yml file:

export ANSIBLE_FM_IP=<GigaVUE-FM IP Address>

- Execute the playbook to deploy the CPN-UPN Communication solution:

- If the fmInfo.yml file is not encrypted, use the following command:

/usr/bin/ansible-playbook -e '@~/cupsSolution/ansible_inputs.json' -i ~/cupsSolution/cups_inventory /usr/local/share/gigamon-ansible/playbooks/cups/deploy_cups.yml

- If the fmInfo.yml file is encrypted, use the following command:

/usr/bin/ansible-playbook -e '@~/cupsSolution/ansible_inputs.json' -i ~/cupsSolution/cups_inventory /usr/local/share/gigamon-ansible/playbooks/cups/deploy_cups.yml

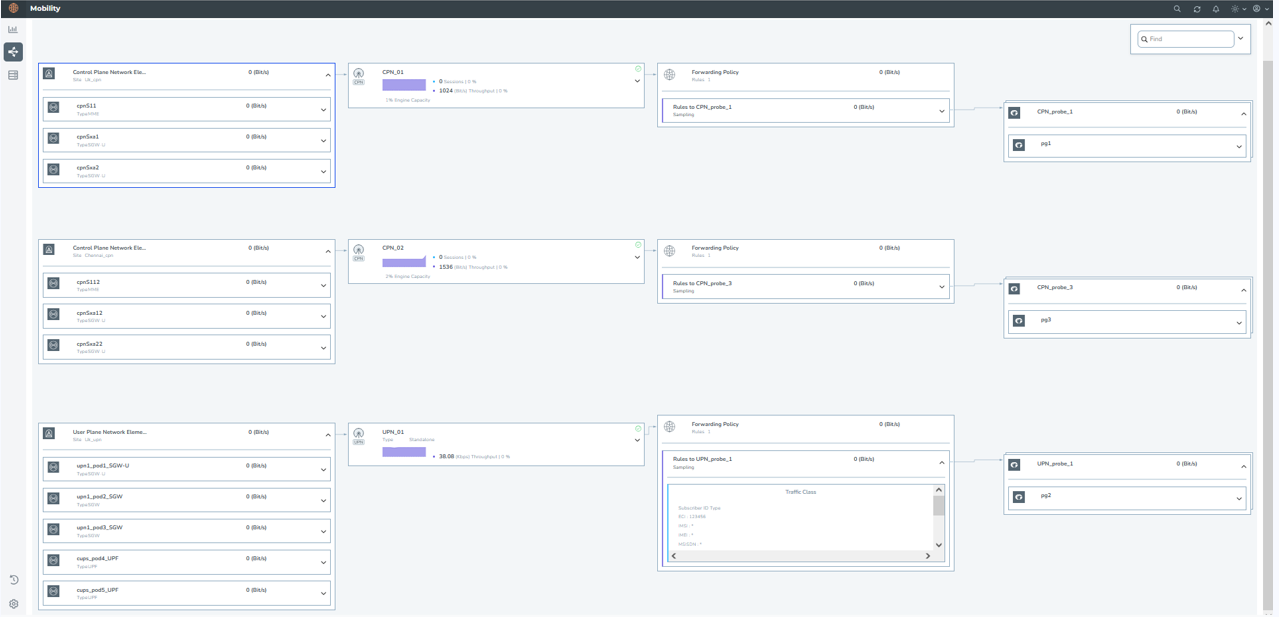
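The two deployment steps can also be driven from a script. The following is a minimal sketch, assuming the playbook path and the cups_inventory file name shown in the command above; the function name deploy_invocation is hypothetical. It only builds the argument list and environment and does not run anything itself.

```python
from __future__ import annotations

import os
from pathlib import Path

ANSIBLE_PLAYBOOK = "/usr/bin/ansible-playbook"
DEPLOY_PLAYBOOK = "/usr/local/share/gigamon-ansible/playbooks/cups/deploy_cups.yml"


def deploy_invocation(
    fm_ip: str,
    inventory_dir: Path,
    inventory_name: str = "cups_inventory",
) -> tuple[list[str], dict[str, str]]:
    """Build the argument list and environment for the deploy command."""
    # Equivalent of 'export ANSIBLE_FM_IP=<GigaVUE-FM IP Address>'.
    env = dict(os.environ)
    env["ANSIBLE_FM_IP"] = fm_ip
    argv = [
        ANSIBLE_PLAYBOOK,
        # '-e @file' loads extra variables from the JSON file.
        "-e", f"@{inventory_dir / 'ansible_inputs.json'}",
        "-i", str(inventory_dir / inventory_name),
        DEPLOY_PLAYBOOK,
    ]
    return argv, env
```

The result can be passed to subprocess.run(argv, env=env, check=True). Building the paths from an absolute inventory_dir avoids depending on how the tilde in a quoted argument is expanded.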

After deploying the CPN-UPN Communication solution using Ansible, you can view how communication is established between the CPN and UPN in GigaVUE-FM. Follow the steps given below:

- In GigaVUE-FM, go to Traffic > Physical > Orchestrated Flows.

- Click Mobility.

- Click the Alias.

The following image shows the CPN-UPN communication solution:

After configuring the CPN-UPN Communication solution, you can view the analytics and statistics in the CUPS Communication dashboard. Refer to View CUPS Communication Dashboard for more information.

Remove CPN-UPN Communication

To remove the CPN-UPN Communication solution, follow these steps:

- Execute the playbook using the following command:

/usr/local/bin/ansible-playbook -e '@~/<Path to Inventory directory>/ansible_inputs.json' -i ~/<Path to Inventory directory>/mobility_inventory /<Path to Ansible playbook>/delete_mobility_solution.yml

Note: The multiple YML files created inside the host_vars are concatenated, converted into JSON format and sent to GigaVUE-FM.
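The idea in this note can be illustrated with a small sketch. It assumes the host_vars files have already been parsed into dictionaries, and the list-of-documents layout with "source" and "content" keys is purely illustrative; the actual payload structure sent to GigaVUE-FM is not described here. The function name build_payload is hypothetical.

```python
from __future__ import annotations

import json


def build_payload(host_vars: dict[str, dict]) -> str:
    """Combine already-parsed host_vars documents into one JSON string."""
    combined = []
    for name in sorted(host_vars):
        # Illustrative layout only: keep each file name next to its content.
        combined.append({"source": name, "content": host_vars[name]})
    return json.dumps(combined, indent=2)
```

For example, build_payload({"UK": {"Site": {"alias": "UK"}}}) returns a JSON array with a single entry for the UK site file.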